Unraveling the Mysteries of Kernel PCA: A Leap Beyond Conventional PCA

Introduction

Have you ever wondered how to distill complex, high-dimensional data into something more manageable and insightful?

This is where the magic of Principal Component Analysis (PCA) comes into play.

But we're not stopping at traditional PCA; we're diving into the fascinating world of kernel PCA.

In this article, we'll unravel its secrets, showcasing how it goes beyond standard PCA in handling nonlinear data.

What Is PCA?

PCA, a staple in statistical methods and machine learning, aims to simplify complex datasets while preserving their core information. This is achieved by transforming the data into a new coordinate system, where the variance is maximized along new axes, known as principal components.

Consider a two-dimensional dataset. The principal components can be defined in two equivalent ways:

Maximum Variance: Maximizes the variance of the data's orthogonal projection onto a lower-dimensional space.

Minimum Error: Minimizes the mean squared error between data points and their projections.

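PCA by itself doesn't reduce data dimensionality; it finds a new set of orthogonal axes, ordered by how much variance each one captures. The reduction comes from keeping only the first few components and discarding the rest. Because PCA is unsupervised, it maximizes variance rather than class separability, although the two often go together.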
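The maximum-variance view can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the `pca` function name and the synthetic toy data are assumptions made for the example.

```python
import numpy as np

def pca(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components."""
    # Center the data so the covariance is computed around the mean.
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)

    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]

    # Coordinates of each sample along the chosen principal axes.
    return X_centered @ components, eigvals[order]

rng = np.random.default_rng(0)
# Hypothetical toy data: correlated two-dimensional points.
X = rng.normal(size=(200, 2)) @ np.array([[3.0, 0.0], [1.0, 0.5]])

Z, explained_variance = pca(X, n_components=1)
print(Z.shape, explained_variance)
```

The variance of the projected data along the first axis equals the largest eigenvalue of the covariance matrix, which is what "maximum variance" means in practice.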

What Is Kernel PCA?

Traditional PCA works well when the structure in the data is linear but falters with complex, nonlinear datasets.

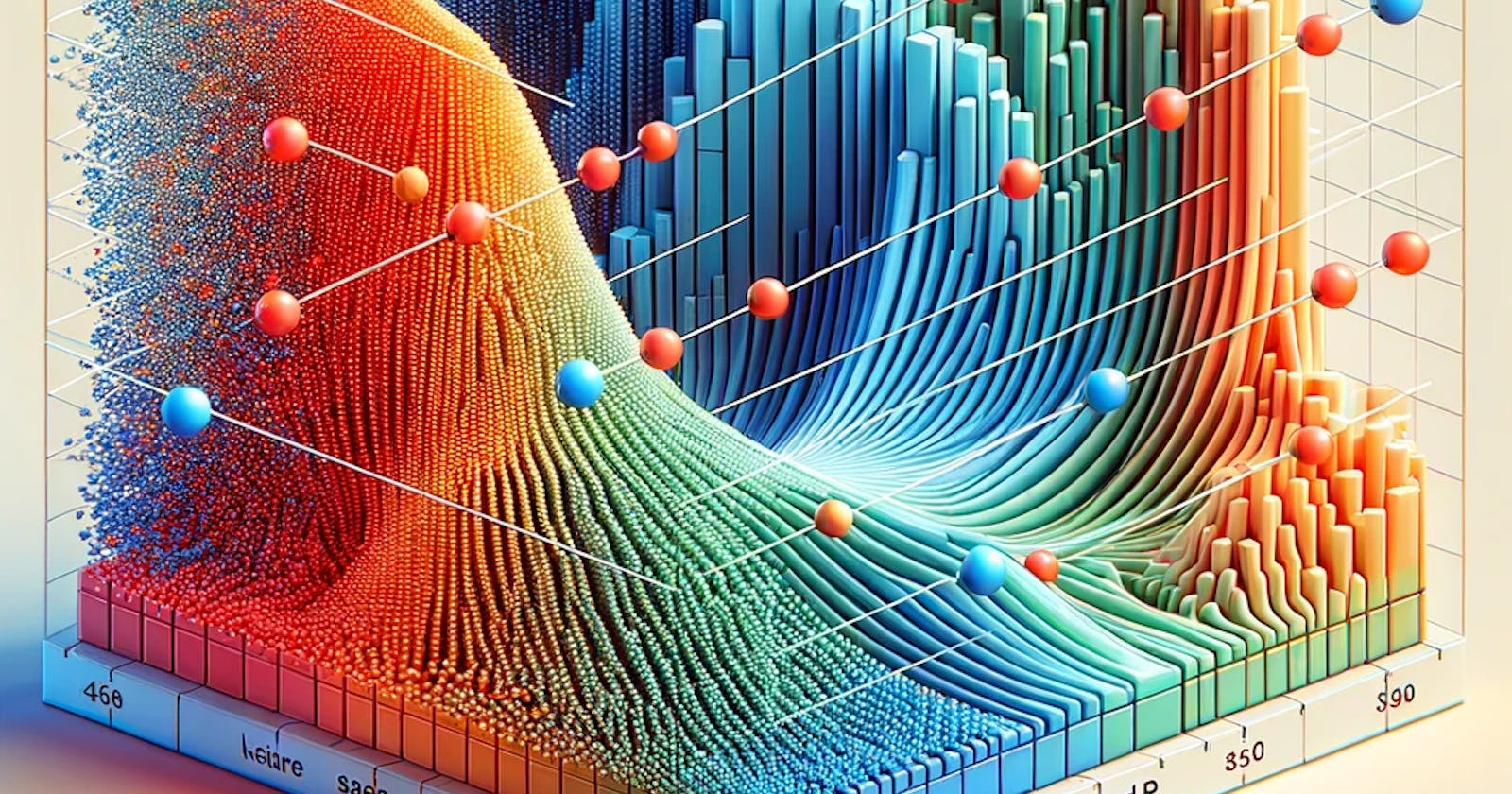

Kernel PCA (KPCA) addresses this by implicitly mapping the data into a higher-dimensional space, where it may become linearly separable, and then applying PCA there.

Imagine a two-dimensional dataset with two distinct classes. If the classes are arranged nonlinearly (think of a donut shape with a circle in its center), no straight line separates them, and standard PCA cannot pull them apart when reducing dimensions.

However, mapping this dataset into a three-dimensional space, the kind of space a kernel function works in implicitly, allows for more effective separation when applying PCA.
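As a minimal sketch of this idea, the snippet below lifts a donut-shaped toy dataset into three dimensions by adding the squared distance from the origin as a third coordinate. The radii, the `lift` helper, and the separating threshold are illustrative assumptions. The mapping is written out explicitly here, whereas kernel PCA performs it only implicitly.

```python
import numpy as np

rng = np.random.default_rng(0)
angles = rng.uniform(0, 2 * np.pi, size=100)

# Hypothetical toy data: an inner circle (radius 1) and an outer ring (radius 3).
inner = np.column_stack([np.cos(angles[:50]), np.sin(angles[:50])])
outer = 3.0 * np.column_stack([np.cos(angles[50:]), np.sin(angles[50:])])

def lift(points):
    """Explicit feature map (x, y) -> (x, y, x^2 + y^2)."""
    squared_radius = (points ** 2).sum(axis=1)
    return np.column_stack([points, squared_radius])

inner_3d, outer_3d = lift(inner), lift(outer)

# In three dimensions, a flat plane at height 5 sits between the two classes.
separable = inner_3d[:, 2].max() < 5.0 < outer_3d[:, 2].min()
print(separable)
```

In two dimensions no straight line splits the classes, but after the lift a single threshold on the third coordinate does.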

Kernel Functions

A kernel function measures the similarity between two data points as if they had been mapped into a higher-dimensional feature space, where the data may be easier to separate.

Popular kernel functions include:

Linear

Polynomial

Gaussian (RBF)

Sigmoid
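For reference, the four kernels above can be written as short NumPy functions. This is a sketch using their common textbook forms. The parameter names `gamma`, `degree`, and `coef0` follow a widespread convention, and the default values are arbitrary assumptions chosen for the example.

```python
import numpy as np

def linear_kernel(x, y):
    # k(x, y) = x . y
    return np.dot(x, y)

def polynomial_kernel(x, y, degree=3, gamma=1.0, coef0=1.0):
    # k(x, y) = (gamma * x . y + coef0) ** degree
    return (gamma * np.dot(x, y) + coef0) ** degree

def rbf_kernel(x, y, gamma=1.0):
    # k(x, y) = exp(-gamma * ||x - y||^2)
    return np.exp(-gamma * np.sum((x - y) ** 2))

def sigmoid_kernel(x, y, gamma=1.0, coef0=0.0):
    # k(x, y) = tanh(gamma * x . y + coef0)
    return np.tanh(gamma * np.dot(x, y) + coef0)

x = np.array([1.0, 2.0])
y = np.array([0.5, -1.0])

for kernel in (linear_kernel, polynomial_kernel, rbf_kernel, sigmoid_kernel):
    print(kernel.__name__, kernel(x, y))
```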

Selecting the optimal kernel for a model is often challenging.

A practical approach is to treat the kernel and its parameters as hyperparameters and to tune them with cross-validation, alongside the other hyperparameters of the model that consumes the kernel PCA output.
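One way to set this up, assuming scikit-learn is available, is to place `KernelPCA` in a pipeline with a downstream classifier and search over the kernel settings. The toy dataset, the choice of logistic regression, and the grid values below are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import KernelPCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Toy data: two concentric circles (an assumption made for illustration).
X, y = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)

# Kernel PCA feeds a simple linear classifier, so the classifier's
# cross-validated accuracy reflects how useful the projection is.
pipeline = Pipeline([
    ("kpca", KernelPCA(n_components=2)),
    ("clf", LogisticRegression()),
])

# The kernel and its width are searched like any other hyperparameter.
param_grid = {
    "kpca__kernel": ["linear", "poly", "rbf"],
    "kpca__gamma": [0.1, 1.0, 10.0],
}

search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Because kernel PCA is unsupervised, the score being optimized here belongs to the downstream classifier, so the selected kernel is the one that serves that particular task best.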

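The kernel trick makes this mapping implicit: the algorithm only needs inner products between mapped points, and the kernel computes those directly from the original data, avoiding the computational expense of constructing the higher-dimensional representation.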
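A small numerical check makes the kernel trick concrete. For the homogeneous degree-2 polynomial kernel in two dimensions, the kernel value matches the inner product of an explicit three-dimensional feature map. The helper names `phi` and `poly2_kernel` and the sample points are assumptions made for this sketch.

```python
import numpy as np

def phi(v):
    """Explicit feature map for the kernel (x . y)^2 in two dimensions."""
    return np.array([v[0] ** 2, np.sqrt(2.0) * v[0] * v[1], v[1] ** 2])

def poly2_kernel(x, y):
    """Same quantity, computed without ever building phi."""
    return np.dot(x, y) ** 2

x = np.array([1.0, 2.0])
y = np.array([3.0, 0.5])

explicit = np.dot(phi(x), phi(y))  # inner product in the mapped space
implicit = poly2_kernel(x, y)      # kernel evaluated on the original points
print(explicit, implicit)
```

Both routes give the same number, but the kernel never materializes the mapped vectors. For kernels such as the Gaussian (RBF), whose feature space is infinite-dimensional, the implicit route is the only practical one.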

Kernel PCA vs. Standard PCA

Advantages of Kernel PCA

Higher-dimensional Transformation: By implicitly mapping data into a higher-dimensional space, kernel PCA can make classes or clusters easier to separate.

Nonlinear Transformation: It adeptly captures complex, nonlinear relationships.

Flexibility: Kernel PCA's ability to detect nonlinear patterns makes it adaptable to various data types, enhancing its utility in fields like image recognition and speech processing.

Advantages of Standard PCA

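Computational Efficiency: Standard PCA is usually cheaper to compute, especially when the number of samples is large, because kernel PCA must build and decompose a kernel matrix whose size grows with the square of the number of samples.

Interpretability: Each principal component is a linear combination of the original features, so the transformed data is easier to understand and interpret.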

Linearity: It excels in capturing linear relationships.
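The efficiency gap is easiest to see in a from-scratch sketch. Kernel PCA decomposes a matrix with one row and one column per sample, while standard PCA decomposes a covariance matrix with one row and one column per feature. The function name `rbf_kernel_pca`, the choice of the Gaussian (RBF) kernel, and the random data are assumptions made for this example.

```python
import numpy as np

def rbf_kernel_pca(X, n_components, gamma=1.0):
    """Minimal kernel PCA with an RBF kernel (training points only)."""
    # Pairwise squared distances, shape (n_samples, n_samples).
    sq_norms = (X ** 2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    K = np.exp(-gamma * sq_dists)

    # Center the kernel matrix, which centers the data in feature space.
    n = K.shape[0]
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n

    # Eigendecomposition of the n x n matrix is the expensive step.
    eigvals, eigvecs = np.linalg.eigh(K_centered)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # Projections of the training points onto the kernel principal axes.
    Z = eigvecs * np.sqrt(np.maximum(eigvals, 0.0))
    return Z, K_centered

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))  # 300 samples, 5 features

Z, K_centered = rbf_kernel_pca(X, n_components=2, gamma=0.5)
covariance = np.cov(X, rowvar=False)
print(K_centered.shape, covariance.shape)
```

With 300 samples and 5 features, kernel PCA works on a 300 x 300 matrix while standard PCA works on a 5 x 5 one, and the gap widens as more samples are added.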

Applications of Kernel PCA

Kernel PCA has diverse applications in fields requiring an understanding of nonlinear data relationships, such as:

Image Recognition: It excels in recognizing nonlinear patterns in image data.

Natural Language Processing (NLP): Useful in text classification, sentiment analysis, and document clustering.

Genomics and Bioinformatics: Assists in analyzing complex gene expression data.

Finance: Applied in financial modeling to understand nonlinear trends in stock prices and financial data.

Conclusion

Kernel PCA transcends the limitations of standard PCA by adeptly handling nonlinear data structures.

Its integration into various domains, from image recognition to genomics, underscores its versatility and power in feature extraction and data preparation in the realm of machine learning.
