Do you want to learn how convnets work?

Let's use MNIST, the hello world of #deeplearning

Let's go through the steps of building and training a model.

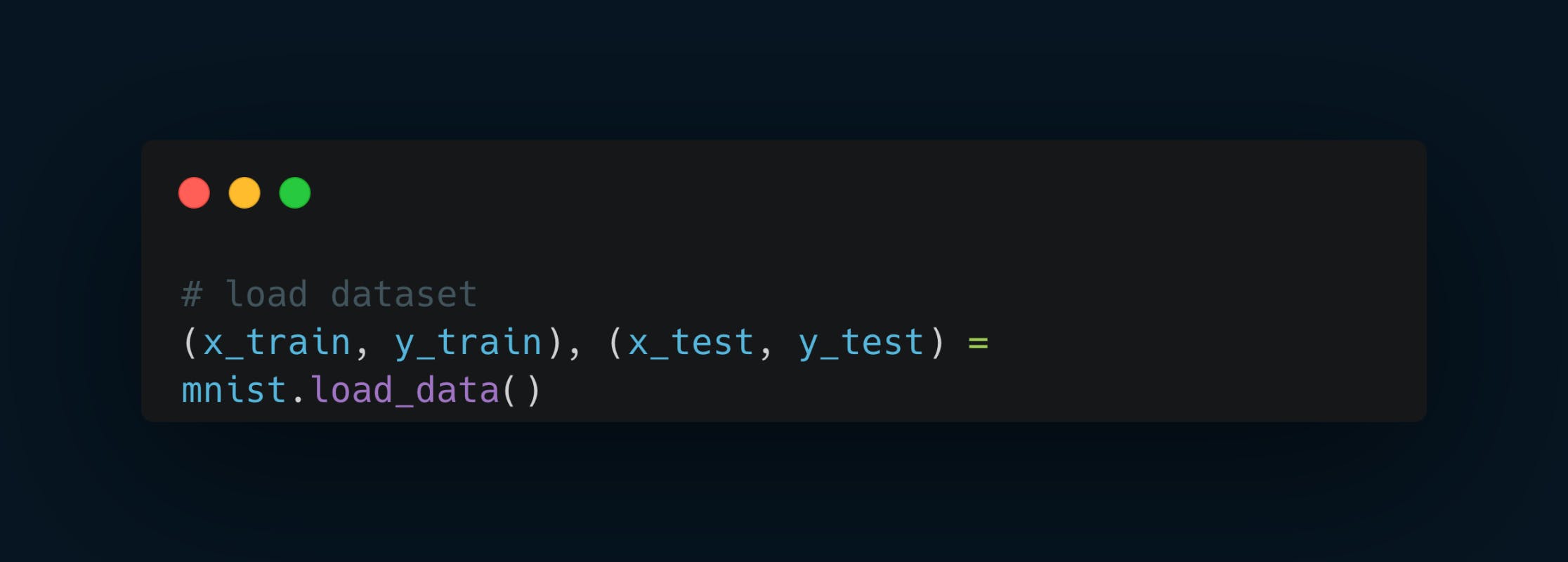

Load the dataset

The first step is to load the MNIST dataset. It contains 70,000 grayscale 28x28 images of handwritten digits.

We load it with Keras, which returns the dataset already split into train and test sets.

x_train and x_test contain our train and test images.

y_train and y_test contain the target values: a number between 0 and 9 indicating the digit shown in the corresponding image.

That's 60,000 images to train the model and 10,000 to test it.

Preprocess the data

Next, we need to preprocess the dataset.

Each pixel value ranges from 0 to 255. Neural networks train much better on small input values.

So we normalize the pixels by dividing them by 255. That way, each pixel value falls between 0 and 1.
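A minimal NumPy sketch of this normalization step. A small random uint8 array stands in for the real x_train (an assumption so the snippet runs without downloading MNIST); the float32 cast matches the dtype Keras layers use by default.

```python
import numpy as np

# Stand-in for x_train: 4 random 28x28 grayscale images with pixel values in [0, 255]
rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(4, 28, 28)).astype(np.uint8)

# Cast to float32, then scale every pixel into [0, 1]
x_train = x_train.astype("float32") / 255.0

print(x_train.dtype, x_train.min(), x_train.max())
```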

When dealing with images, Conv2D layers expect a tensor with 4 dimensions: batch size, height, width, and color channels.

The shape of x_train is (60000, 28, 28).

So we need to reshape it to add the missing channel dimension.

In this case, there is a single channel because the images are grayscale.
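A minimal sketch of the reshape in NumPy, assuming the channels-last layout Keras uses by default. A batch of 8 blank images stands in for the 60,000 real ones.

```python
import numpy as np

# Stand-in for the normalized x_train: shape (batch, height, width)
x_train = np.zeros((8, 28, 28), dtype="float32")

# Add a trailing channel axis: (batch, 28, 28) -> (batch, 28, 28, 1)
x_train = x_train.reshape(-1, 28, 28, 1)
# Equivalent alternative: np.expand_dims(x_train, -1)

print(x_train.shape)  # (8, 28, 28, 1)
```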

Target values go from 0 to 9 (the value of each digit).

We need to one-hot encode these values so the neural network treats them as categories rather than as ordered numbers.

For example, the value 5 turns into an array of zeros with a single 1 at index 5: [0, 0, 0, 0, 0, 1, 0, 0, 0, 0].
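A minimal NumPy sketch of one-hot encoding. The helper name one_hot is hypothetical; Keras also ships keras.utils.to_categorical for the same job.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Return a (len(labels), num_classes) float32 array with a single 1 per row."""
    labels = np.asarray(labels)
    encoded = np.zeros((labels.shape[0], num_classes), dtype="float32")
    # Row i gets a 1 in the column given by its label
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

y = one_hot([5, 0, 9])
print(y[0])  # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
```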

Create the model

We need to create our convnet model.

A convnet is a sequence of Conv2D/MaxPooling blocks followed by a Dense layer that acts as the classifier at the head.

The input shape is a 28x28x1 tensor (height, width, channels).

The first layer is a Conv2D layer with 32 filters and a 3x3 kernel.

This layer learns 32 different filters during training. Each filter slides over the image and produces its own feature map, a different representation of the input.
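To make "filters" concrete, here is a plain NumPy sketch of what a single Conv2D layer computes on one grayscale image, assuming stride 1 and no padding (the Keras defaults). The 32 kernels are random stand-ins for learned weights, the bias term is left out, and conv2d_single is a hypothetical helper, not the Keras implementation.

```python
import numpy as np

def conv2d_single(image, kernel):
    """Valid (no padding), stride-1 2D convolution of one channel with one kernel.

    Like Keras Conv2D, this is technically cross-correlation: the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Sum of the element-wise product between the kernel and the patch it covers
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

rng = np.random.default_rng(0)
image = rng.random((28, 28)).astype("float32")
# 32 random 3x3 kernels stand in for the 32 filters Conv2D learns during training
kernels = rng.standard_normal((32, 3, 3)).astype("float32")

# One 26x26 feature map per filter, stacked along the channel axis
feature_maps = np.stack([conv2d_single(image, k) for k in kernels], axis=-1)
print(feature_maps.shape)  # (26, 26, 32)
```

A 3x3 kernel without padding can only be placed at 26 positions along each 28-pixel axis, which is why the spatial size shrinks by 2.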

We also need to define the activation function for this layer: ReLU.

ReLU, defined as max(0, x), introduces non-linearity, which lets the model solve non-linear problems like recognizing handwritten digits.
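ReLU itself is a one-liner. A NumPy sketch:

```python
import numpy as np

def relu(x):
    # Keep positive values, replace negative values with 0
    return np.maximum(0, x)

print(relu(np.array([-2.0, -0.5, 0.0, 1.5, 3.0])))
```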

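After the Conv2D layer comes a max pooling operation (MaxPooling2D).

This layer downsamples the feature maps produced by the convolutional layer. With a 2x2 window (the Keras default pool size), it keeps only the largest value in each window, halving the width and height.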

We want to throw away unimportant details and retain what truly matters.
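A minimal NumPy sketch of 2x2 max pooling with stride 2 on a (height, width, channels) array. The helper max_pool_2x2 is hypothetical and only illustrates the operation MaxPooling2D performs.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 on a (height, width, channels) array.

    An odd trailing row/column is dropped, matching Keras' default 'valid' padding.
    """
    h, w, c = feature_map.shape
    h2, w2 = h // 2, w // 2
    trimmed = feature_map[:h2 * 2, :w2 * 2, :]
    # Group pixels into 2x2 windows, then take the max inside each window
    windows = trimmed.reshape(h2, 2, w2, 2, c)
    return windows.max(axis=(1, 3))

x = np.arange(16, dtype="float32").reshape(4, 4, 1)
print(max_pool_2x2(x)[:, :, 0])
```

Applied to the 26x26x32 output of the previous layer, this yields a 13x13x32 tensor: a quarter of the values, with the strongest filter responses kept.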

These Conv2D/MaxPooling blocks help the model extract features that describe the image.

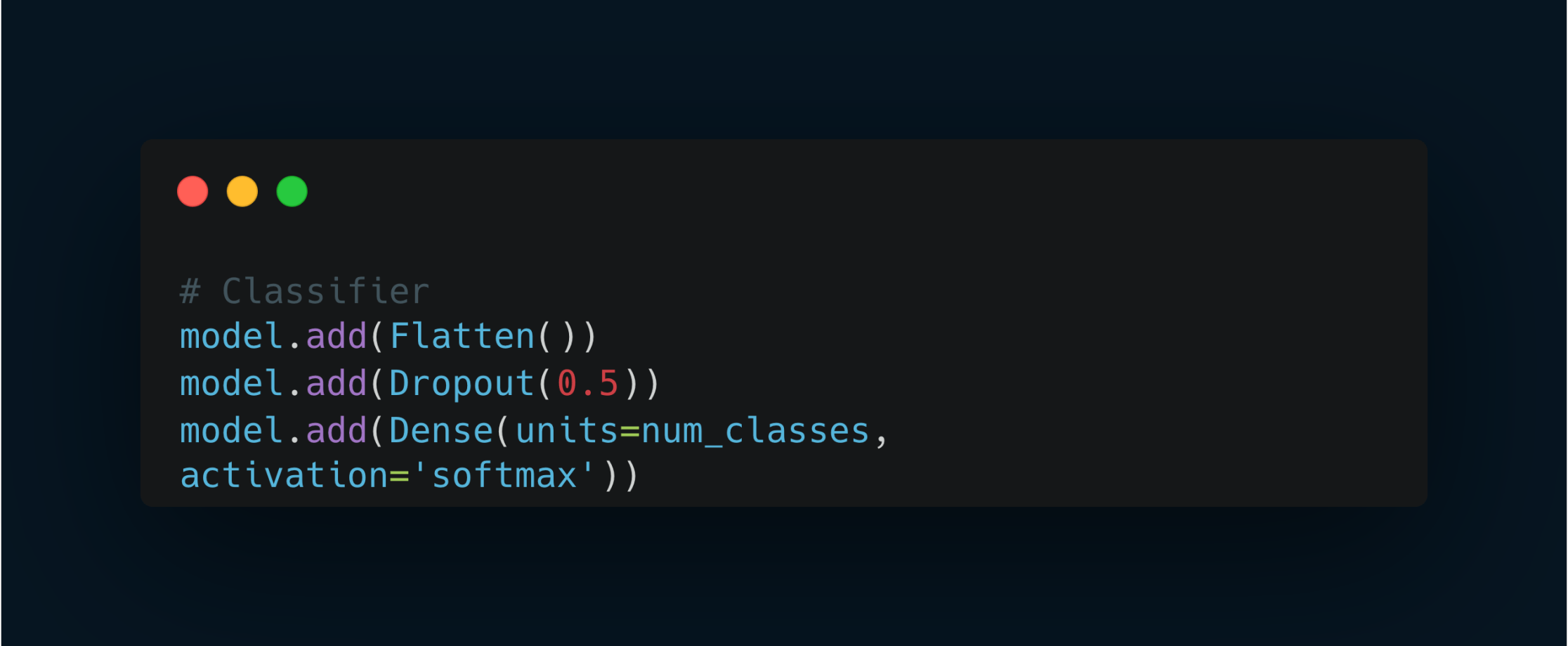

The next step is to add a classifier to the head of the model.

First, we flatten the output: we want each image's feature maps as one continuous list of values.

That's what the Flatten layer does. It turns each image's 3D feature maps into a 1D tensor.
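A sketch of what Flatten does to the shapes, assuming a single Conv2D (32 filters, 3x3) + 2x2 MaxPooling block with Keras' default 'valid' padding. The actual notebook may stack more blocks, which would change these numbers.

```python
import numpy as np

# Assumed pipeline per image: 28x28x1 -> Conv2D (valid) -> 26x26x32 -> MaxPool -> 13x13x32
pooled_batch = np.zeros((2, 13, 13, 32), dtype="float32")  # a batch of 2 images

# Flatten keeps the batch axis and merges everything else: 13 * 13 * 32 = 5408
flat = pooled_batch.reshape(pooled_batch.shape[0], -1)
print(flat.shape)  # (2, 5408)
```

Under the same assumption, the 10-unit output layer would hold 5408 × 10 weights plus 10 biases, i.e. 54,090 parameters.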

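Then, we add a Dropout layer for regularization. During training, it randomly sets a fraction of its inputs to zero, which helps prevent overfitting.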
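A minimal NumPy sketch of inverted dropout, the variant Keras' Dropout layer uses: dropped inputs become 0 and the survivors are scaled by 1 / (1 - rate). The rate of 0.5 is only an example value, not necessarily the one in the notebook.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero a random fraction `rate` of inputs during training
    and scale the survivors by 1 / (1 - rate) so each unit's expected value is unchanged.
    At inference time the input passes through untouched."""
    if not training or rate == 0.0:
        return x
    keep_mask = rng.random(x.shape) >= rate
    return np.where(keep_mask, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
x = np.ones(10_000)
y = dropout(x, rate=0.5, rng=rng)
print((y == 0).mean())  # close to 0.5
print(y.mean())         # close to 1.0
```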

The output layer is a Dense layer with 10 units, one for each possible digit.

We use softmax as its activation function because this is a multi-class, single-label classification problem.

Softmax turns the 10 outputs into a probability distribution (values between 0 and 1 that sum to 1), and the highest probability points to the most likely digit in the image.
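A minimal NumPy sketch of softmax. The logits are made-up scores for one image; subtracting the maximum first is a standard trick to avoid overflow in exp and does not change the result.

```python
import numpy as np

def softmax(logits):
    # Shift by the max for numerical stability, exponentiate, then normalize
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

# Hypothetical raw scores from the 10-unit output layer for one image
logits = np.array([1.0, 0.5, 0.2, 0.1, 0.0, 4.0, 0.3, 0.2, 1.5, 0.0])
probs = softmax(logits)
print(np.isclose(probs.sum(), 1.0))  # True
print(int(np.argmax(probs)))         # 5
```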

Compile the model

The next step is to compile the model.

Use Adam as the optimizer.

The loss is categorical cross-entropy, the standard choice for multi-class, single-label classification with one-hot encoded targets.
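A minimal NumPy sketch of categorical cross-entropy, using 3 classes instead of 10 to keep it short. The clipping epsilon is an assumption to avoid log(0). Confident correct predictions give a small loss; confident wrong ones give a large loss.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot targets and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

y_true = np.array([[0, 0, 1], [1, 0, 0]], dtype="float32")
confident = np.array([[0.05, 0.05, 0.90], [0.80, 0.10, 0.10]])
wrong = np.array([[0.90, 0.05, 0.05], [0.10, 0.80, 0.10]])
print(categorical_crossentropy(y_true, confident))  # small loss
print(categorical_crossentropy(y_true, wrong))      # much larger loss
```

Only the probability assigned to the true class matters: the loss for each image is simply -log of that probability.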

We also want to record the accuracy as the model trains.

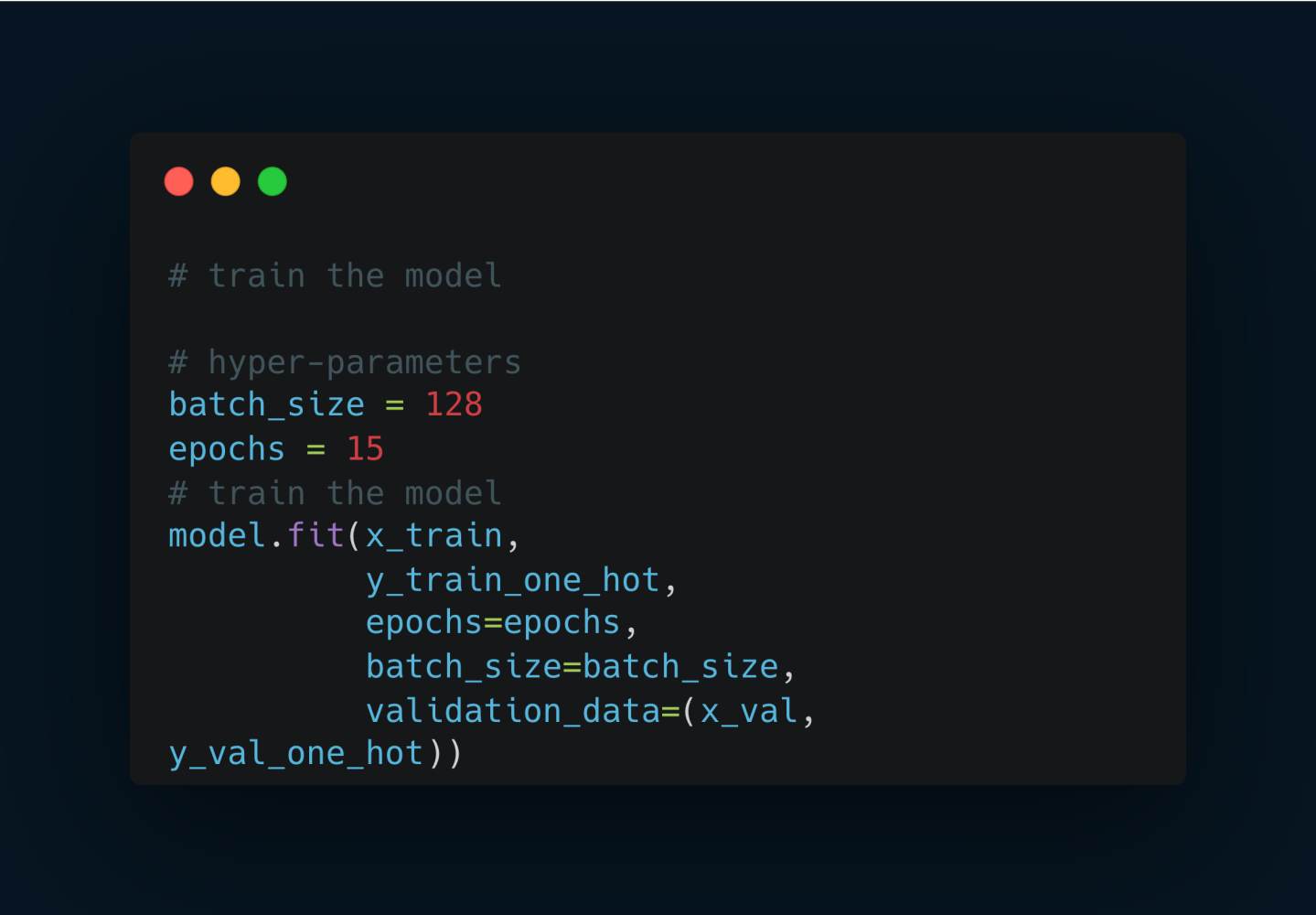

Train the model

Calling fit() on the model starts training.

We use a batch size of 32 images and train for 10 epochs.
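A quick arithmetic check of what these settings mean, assuming all 60,000 training images are used (no validation split):

```python
import math

num_train_images = 60_000
batch_size = 32
epochs = 10

# Each step processes one batch; a partial last batch would still count as a step
steps_per_epoch = math.ceil(num_train_images / batch_size)
total_updates = steps_per_epoch * epochs

print(steps_per_epoch)  # 1875
print(total_updates)    # 18750
```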

When this stage is done, we have a trained model.

Evaluate the model

An important final step is to evaluate the model on the test set.

We need to measure its performance on data it has never seen before; that tells us how well it generalizes beyond the training images.
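In Keras, model.evaluate(x_test, y_test) returns the loss plus the metrics passed to compile, here accuracy. A minimal NumPy sketch of how that accuracy is computed from one-hot targets and predicted probabilities, using a hypothetical 4-image, 3-class example:

```python
import numpy as np

def accuracy(y_true_onehot, y_pred_probs):
    """Fraction of images whose highest-probability class matches the true class."""
    true_digits = np.argmax(y_true_onehot, axis=1)
    predicted_digits = np.argmax(y_pred_probs, axis=1)
    return float(np.mean(true_digits == predicted_digits))

# Hypothetical: 4 test images, 3 classes to keep it short
y_true = np.eye(3)[[0, 1, 2, 1]]
y_pred = np.array([
    [0.7, 0.2, 0.1],  # predicts 0, correct
    [0.1, 0.8, 0.1],  # predicts 1, correct
    [0.6, 0.3, 0.1],  # predicts 0, wrong (true class is 2)
    [0.2, 0.5, 0.3],  # predicts 1, correct
])
print(accuracy(y_true, y_pred))  # 0.75
```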

You can find the full code here: https://github.com/juancolamendy/ml-tutorials/blob/main/keras/convnet_mnist.ipynb

If you like this, share it ♻️