Have you ever trained a machine learning model that performed exceptionally on your training data but failed miserably on real-world, unseen data?

If so, you've encountered overfitting, a common pitfall in machine learning that regularization techniques aim to solve.

Regularization is a fundamental concept in machine learning, designed to prevent overfitting and improve model generalization.

This guide will delve into what regularization is, why it's crucial, and how it can make or break your model's performance.

Keep reading!

Understanding Overfitting in ML/AI

Overfitting occurs when a model learns the noise in the training data, rather than the underlying patterns, resulting in poor performance on unseen data.

It means the model becomes very good at recognizing data it has seen before, but poor at predicting outcomes for new data.

Overfitting can manifest in various ways, such as high variance in the model's predictions or excessive sensitivity to small changes in the input data.

Regularization techniques help strike a balance between fitting the training data well and maintaining the model's ability to generalize to new data.

It involves adding a penalty term to the objective function during training, which discourages the model from learning overly complex patterns.

This penalty limits the model's complexity and prevents it from fitting the noise in the training data.
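The penalized objective described above can be written compactly. The notation here is introduced for illustration: $L$ is the unregularized training loss, $\Omega$ is the penalty, $\theta$ are the model parameters, and $\lambda$ is the regularization strength.

```latex
J(\theta) = L(\theta) + \lambda \, \Omega(\theta), \qquad \lambda \ge 0

\Omega_{\mathrm{L1}}(\theta) = \sum_{j} |\theta_j|, \qquad
\Omega_{\mathrm{L2}}(\theta) = \sum_{j} \theta_j^{2}
```

Setting $\lambda = 0$ recovers the unregularized objective; larger values put more weight on keeping the parameters small.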

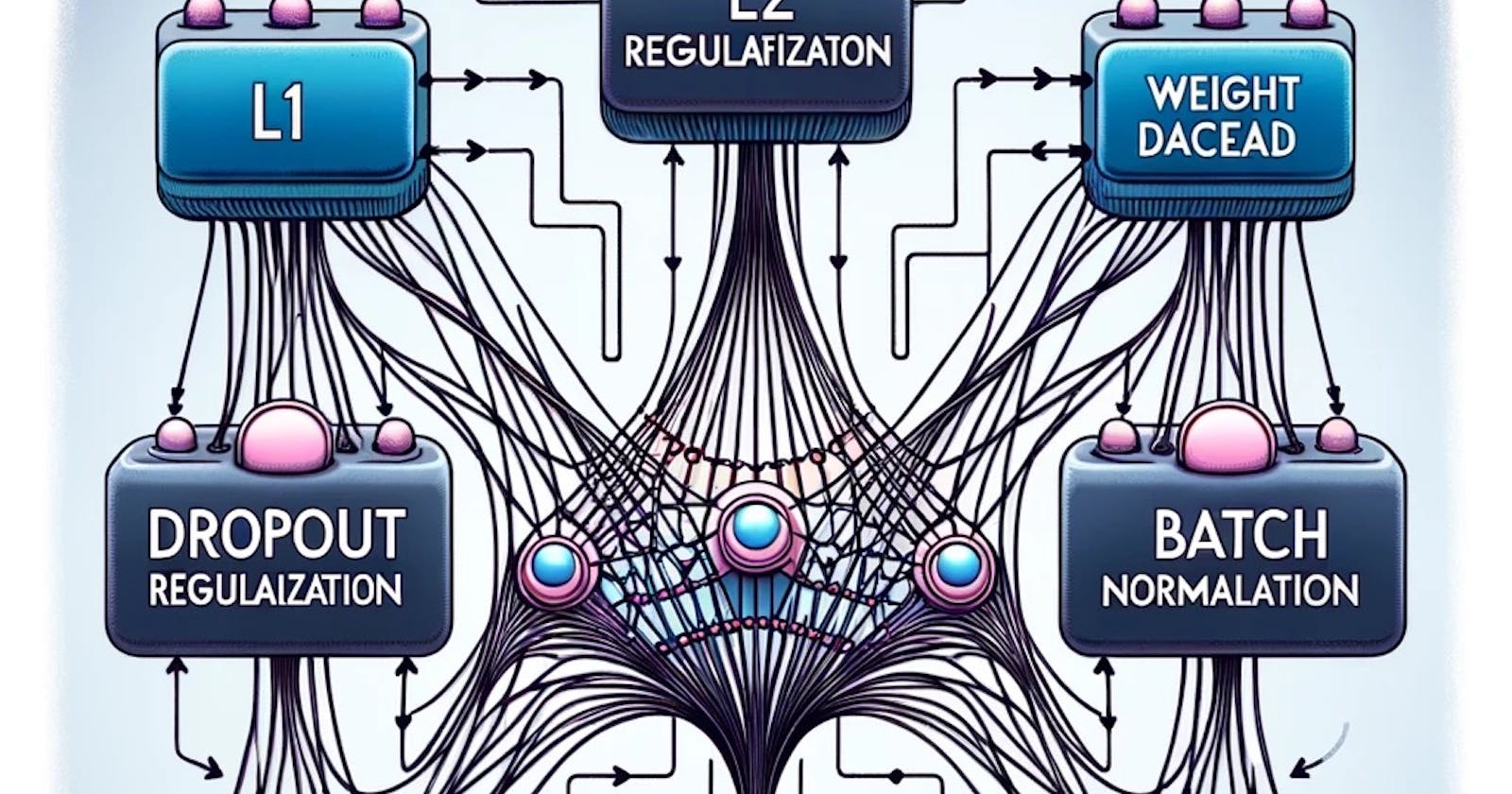

There are several types of regularization techniques commonly used in machine learning, including L1 regularization (Lasso), L2 regularization (Ridge), and dropout regularization.

Each technique has its own mathematical formulation and impact on the model's parameters. Understanding these techniques is essential for building robust and accurate machine learning models.

Types of Regularization Techniques

L1 Regularization (Lasso)

L1 regularization, also known as Lasso (Least Absolute Shrinkage and Selection Operator) regularization, introduces sparsity into the model feature coefficients.

This means it can set some feature coefficients to zero, effectively performing feature selection.

Mathematically, L1 regularization adds a penalty proportional to the sum of the absolute values of the coefficients.

The main advantage of L1 regularization is its ability to produce sparse models, reducing the complexity and making them easier to interpret.

However, in the presence of highly correlated features, L1 regularization tends to arbitrarily select one feature and discard the others.
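To see how L1 regularization produces exact zeros, here is a minimal NumPy sketch. It assumes a squared-error loss and uses proximal gradient descent (ISTA), one of several ways to fit a Lasso model; the function names, the synthetic data, and the value of `lam` are illustrative choices, not part of any particular library.

```python
import numpy as np

def soft_threshold(w, threshold):
    """Shrink each value toward zero; values within the threshold become exactly zero."""
    return np.sign(w) * np.maximum(np.abs(w) - threshold, 0.0)

def lasso_ista(X, y, lam, n_iters=500):
    """Minimize (1 / 2n) * ||y - Xw||^2 + lam * ||w||_1 with proximal gradient descent."""
    n, d = X.shape
    w = np.zeros(d)
    # Step size 1/L, where L bounds the curvature of the squared-error term.
    step = n / (np.linalg.norm(X, 2) ** 2)
    for _ in range(n_iters):
        grad = X.T @ (X @ w - y) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
true_w = np.array([3.0, 0.0, 0.0, -2.0, 0.0])  # only two features matter
y = X @ true_w + 0.1 * rng.normal(size=200)

w_hat = lasso_ista(X, y, lam=0.1)
print(np.round(w_hat, 2))
```

In this setup the coefficients of the three irrelevant features end up exactly zero, while the two informative ones are kept and shrunk slightly toward zero.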

L2 Regularization (Ridge)

L2 regularization, or Ridge, works differently from L1: it adds a penalty proportional to the sum of the squared coefficients.

This type of regularization does not set coefficients to zero but rather reduces the impact of less important features.

The key difference from L1 is that all features remain part of the model, but their influence is balanced.

The squared terms in L2 encourage small, evenly distributed coefficient values, which helps improve model robustness.

This property makes L2 regularization useful when all features are believed to be relevant and there is no need for feature selection.

L2 regularization is also more stable than L1 regularization in the presence of highly correlated features.

It tends to shrink the correlated features together, rather than arbitrarily selecting one.
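The behavior with correlated features can be illustrated with a small NumPy sketch. It assumes a linear regression with squared-error loss, for which ridge has a closed-form solution; the data is synthetic and `ridge_fit` is a helper written for this example.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution: w = (X^T X + lam * I)^(-1) X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + 0.01 * rng.normal(size=200)   # nearly a copy of x1
X = np.column_stack([x1, x2])
y = 2.0 * x1 + 0.1 * rng.normal(size=200)

w_unpenalized = ridge_fit(X, y, lam=0.0)   # the split between x1 and x2 is poorly determined
w_ridge = ridge_fit(X, y, lam=1.0)         # the weight is shared almost equally
print("lam=0:", np.round(w_unpenalized, 2))
print("lam=1:", np.round(w_ridge, 2))
```

With the penalty, the two nearly identical features receive nearly identical coefficients whose sum stays close to the true effect of 2.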

Dropout Regularization

In the context of neural networks, Dropout is a powerful regularization technique.

It involves randomly setting the outputs of a fraction of the hidden units to zero at each training update.

This prevents units from co-adapting too much and forces the network to learn more robust features independently.

It also has an ensemble effect, as each training iteration can be seen as training a different sub-network.

Dropout is widely used in deep learning because it effectively helps neural networks avoid overfitting, especially in complex networks.

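At test time, dropout is not applied. To keep the expected activations consistent with training, either the weights are scaled by the keep probability (one minus the dropout probability) at test time, or, as in the "inverted dropout" variant used by most modern frameworks, the surviving activations are scaled up by the inverse of the keep probability during training so that no scaling is needed at test time.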
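As a rough sketch, here is the "inverted" form of dropout in NumPy, which rescales the surviving units during training so that inference is a plain pass-through. The function name and arguments are illustrative and do not come from any specific framework.

```python
import numpy as np

def dropout(activations, drop_prob, training, rng):
    """Inverted dropout: zero units with probability drop_prob and rescale the survivors."""
    if not training or drop_prob == 0.0:
        return activations  # inference: identity, no scaling needed
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
h = np.ones((10000, 8))  # stand-in for a layer's activations
train_out = dropout(h, drop_prob=0.5, training=True, rng=rng)
test_out = dropout(h, drop_prob=0.5, training=False, rng=rng)
print(train_out.mean(), test_out.mean())
```

Both means come out close to 1, which is the point of the rescaling: the expected activation is the same in training and at test time.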

Implementing Regularization in Various Models

Regularization in Linear Models

In linear models, such as linear regression and logistic regression, regularization is typically implemented using L1 (Lasso) or L2 (Ridge) penalties.

These penalties are added to the objective function during training, constraining the model's parameters and preventing overfitting.

Regularization in linear models helps improve generalization performance, especially when the number of features is large compared to the number of training samples.

It also helps address multicollinearity, where features are highly correlated, by shrinking the coefficients of correlated features together.

Regularization in Tree-Based Models

Regularization can also be applied to tree-based models, such as Random Forest and Gradient Boosted Trees (e.g., XGBoost).

In these models, regularization is typically implemented through hyperparameters that control the complexity of the individual trees and the ensemble as a whole.

Some common regularization techniques in tree-based models include:

Limiting the maximum depth of the trees

Setting a minimum number of samples required to split a node

Limiting the maximum number of features considered at each split

Applying a learning rate (shrinkage) to the contributions of each tree

These regularization techniques help prevent overfitting by limiting the complexity of the individual trees and the ensemble.

They encourage the model to learn simpler and more generalizable patterns, rather than memorizing the noise in the training data.
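The controls listed above map onto hyperparameters in common libraries. The sketch below uses scikit-learn's `GradientBoostingRegressor` on synthetic data; the specific values are arbitrary starting points chosen for illustration, not recommendations.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=500, n_features=20, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = GradientBoostingRegressor(
    max_depth=3,            # limit the depth of each tree
    min_samples_split=10,   # minimum samples required to split a node
    max_features=0.5,       # consider half of the features at each split
    learning_rate=0.05,     # shrink the contribution of each tree
    n_estimators=200,
    random_state=0,
)
model.fit(X_train, y_train)
print("train R^2:", round(model.score(X_train, y_train), 3))
print("test  R^2:", round(model.score(X_test, y_test), 3))
```

Comparing the train and test scores while varying one setting at a time is a simple way to see which control matters most for a given dataset.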

Regularization in tree-based models is particularly important when dealing with high-dimensional data or noisy datasets.

It helps improve the model's robustness and generalization performance, leading to better predictions on unseen data.

Regularization in Neural Networks

Neural networks, particularly deep neural networks, are prone to overfitting due to their high capacity and ability to learn complex patterns.

Regularization techniques are essential for training neural networks that generalize well to new data.

Two commonly used regularization techniques in neural networks are dropout and early stopping:

Dropout, as discussed earlier, randomly sets a fraction of a layer's units to zero during training, preventing co-adaptation and overfitting.

Early stopping is a technique where the training is stopped before the model fully converges to the training data.

It involves monitoring the model's performance on a validation set during training and stopping the training when the performance on the validation set starts to degrade.

Early stopping prevents the model from overfitting to the training data and helps find the optimal point of generalization.
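The stopping rule can be sketched in a few lines of plain Python. This version assumes a "patience" criterion, where training stops after a fixed number of epochs without improvement; the function name, the `patience` and `min_delta` parameters, and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the best epoch, stopping once the validation loss
    has failed to improve by more than min_delta for `patience` epochs in a row."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop here; a real loop would restore the weights from best_epoch
    return best_epoch

# Hypothetical validation losses recorded after each epoch.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.60]
print(early_stopping_epoch(val_losses, patience=3))
```

In a real training loop the losses arrive one epoch at a time, and the model weights from the best epoch are saved so they can be restored when training stops.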

Other regularization techniques used in neural networks include weight decay (L2 regularization), batch normalization, and data augmentation.

Weight Decay: Often synonymous with L2 regularization, weight decay directly tackles the issue of overfitting by adding a penalty term to the loss function. This term is proportional to the square of the magnitude of the weights. By doing so, weight decay encourages the network to maintain smaller weight values, which simplifies the model and helps to prevent it from fitting the noise in the training data. This makes the model more likely to generalize well to new, unseen data.
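For plain stochastic gradient descent, the L2 penalty and weight decay lead to the same update, which the sketch below checks numerically. The function names and numbers are illustrative. With adaptive optimizers such as Adam, the two formulations generally do not coincide, which is why some libraries offer a separate "decoupled" weight decay.

```python
import numpy as np

def sgd_step_l2(w, grad_loss, lr, lam):
    """Gradient step on loss + (lam / 2) * ||w||^2."""
    return w - lr * (grad_loss + lam * w)

def sgd_step_weight_decay(w, grad_loss, lr, lam):
    """Decay the weights multiplicatively, then take a plain gradient step."""
    return (1.0 - lr * lam) * w - lr * grad_loss

w = np.array([1.0, -2.0, 0.5])
grad = np.array([0.1, 0.0, -0.3])   # hypothetical gradient of the data loss
a = sgd_step_l2(w, grad, lr=0.1, lam=0.01)
b = sgd_step_weight_decay(w, grad, lr=0.1, lam=0.01)
print(np.allclose(a, b))
```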

Batch Normalization: This technique was originally proposed to address internal covariate shift, where the distribution of each layer's inputs changes during training as the parameters of the previous layers change.

It standardizes the inputs to a layer for each mini-batch. This stabilization makes the training process faster and more stable.

It also acts as a mild regularizer: because the normalization statistics are computed from each mini-batch, they add some noise to the layer inputs, akin to a mild form of dropout. This can help improve the generalization of the model, although its primary purpose is not regularization.
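A minimal sketch of the training-time computation, assuming a fully connected layer whose input has shape (batch, features). The names `gamma` and `beta` for the learnable scale and shift follow common usage; the function itself is written for this example.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Normalize each feature with the statistics of the current mini-batch,
    then apply a learnable scale (gamma) and shift (beta)."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))   # one mini-batch, 4 features
out = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print(np.round(out.mean(axis=0), 3), np.round(out.std(axis=0), 3))
```

Because the mean and variance depend on which examples land in the mini-batch, the same example is normalized slightly differently from step to step. At inference time, implementations typically use running averages collected during training instead of batch statistics.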

Data Augmentation: Particularly prevalent in tasks involving images and audio, data augmentation artificially increases the size and diversity of the training dataset by applying random, but realistic, transformations to the training data.

Examples include rotating, scaling, and cropping images or altering the pitch and speed of audio clips.

This method helps the model learn more robust features by exposing it to varied forms of its input data, thereby improving its ability to generalize well to new, unseen examples.

Because it changes only the training data, data augmentation improves generalization without changing the underlying model architecture.
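For images, a simple augmentation pipeline might look like the sketch below, which applies a random horizontal flip and a random crop using only NumPy. The `augment` helper, the padding size, and the random stand-in image are assumptions made for illustration; real projects usually rely on the augmentation utilities of their deep learning framework.

```python
import numpy as np

def augment(image, rng, pad=2):
    """Random horizontal flip followed by a random crop back to the original size."""
    h, w = image.shape[:2]
    if rng.random() < 0.5:
        image = image[:, ::-1]                       # horizontal flip
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    top = rng.integers(0, 2 * pad + 1)               # random vertical offset
    left = rng.integers(0, 2 * pad + 1)              # random horizontal offset
    return padded[top:top + h, left:left + w]

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                      # stand-in for a real RGB image
batch = np.stack([augment(image, rng) for _ in range(8)])
print(batch.shape)
```

Each call returns a slightly different view of the same image, so the model rarely sees exactly the same input twice.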

These techniques help stabilize the training process, improve generalization, and reduce the sensitivity to hyperparameter choices.

Regularization is crucial for building robust and accurate neural networks, especially when dealing with complex architectures and limited training data.

Regularization Hyperparameters

Regularization techniques often involve hyperparameters that control the strength and behavior of the regularization.

These hyperparameters need to be tuned to achieve optimal performance and find the right balance between fitting the training data and maintaining model simplicity.

One of the key hyperparameters in regularization is the regularization strength, often denoted as λ (lambda).

In L1 and L2 regularization, λ controls the weight of the penalty term in the objective function.

Higher values of λ lead to stronger regularization and simpler models, while lower values allow for more complex models.

The optimal value of λ depends on the specific problem, data characteristics, and the desired trade-off between bias and variance.
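The effect of λ is easy to observe on a small example. The sketch below assumes ridge regression with its closed-form solution and synthetic data, and prints the size of the coefficient vector for increasing values of λ.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 10))
y = X @ rng.normal(size=10) + rng.normal(size=50)

lambdas = [0.0, 0.1, 1.0, 10.0, 100.0]
norms = []
for lam in lambdas:
    # Closed-form ridge solution for this value of lambda.
    w = np.linalg.solve(X.T @ X + lam * np.eye(10), X.T @ y)
    norms.append(np.linalg.norm(w))
    print(f"lambda={lam:>6}: ||w|| = {norms[-1]:.3f}")
```

The norm of the coefficients shrinks as λ grows: a stronger penalty means a simpler, more constrained model.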

Other regularization hyperparameters include the dropout probability in dropout regularization, which determines the fraction of input units to be randomly set to zero during training.

In tree-based models, hyperparameters such as the maximum depth of the trees, minimum samples per leaf, and learning rate also act as regularization controls.

Tuning these hyperparameters is crucial for achieving the best performance and preventing overfitting.

There are several techniques for hyperparameter optimization, including grid search, random search, and Bayesian optimization.

These techniques involve evaluating the model's performance for different combinations of hyperparameters and selecting the best configuration.

Cross-validation is often used in combination with hyperparameter tuning to estimate the model's generalization performance and avoid overfitting to the validation set.
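As a rough illustration of grid search and random search, the sketch below tunes the ridge penalty λ against a single hold-out validation split. The data is synthetic, the helpers are written for this example, and the search range of 10^-3 to 10^3 is an arbitrary assumption; in practice the hold-out score would often be replaced by a cross-validated one.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

rng = np.random.default_rng(0)
X = rng.normal(size=(120, 30))
y = X[:, :3] @ np.array([2.0, -1.0, 0.5]) + rng.normal(size=120)

# Hold out a third of the data for validation.
X_train, y_train = X[:80], y[:80]
X_val, y_val = X[80:], y[80:]

# Grid search: evenly spaced candidates on a log scale.
grid = np.logspace(-3, 3, 13)
# Random search: the same range, sampled log-uniformly.
random_candidates = 10 ** rng.uniform(-3, 3, size=13)

results = {}
for name, candidates in [("grid", grid), ("random", random_candidates)]:
    scores = [mse(X_val, y_val, ridge_fit(X_train, y_train, lam)) for lam in candidates]
    best = float(candidates[int(np.argmin(scores))])
    results[name] = (best, min(scores))
    print(f"{name:>6} search: best lambda = {best:.4g}, validation MSE = {min(scores):.3f}")
```

Searching on a log scale is a common choice for regularization strengths, since useful values can span several orders of magnitude.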

Proper tuning of regularization hyperparameters is essential for building accurate and robust machine learning models.

It requires a systematic approach, domain knowledge, and experimentation to find the optimal configuration for a given problem.

Regularization and Model Evaluation

Evaluating the effectiveness of regularization is crucial for assessing the model's performance and generalization ability.

Several metrics and techniques can be used to measure the impact of regularization on model performance.

One common approach is to use cross-validation to estimate the model's performance on unseen data.

In k-fold cross-validation, the data is split into k subsets (folds); the model is trained on k - 1 of them and evaluated on the remaining one.

This process is repeated k times, so that each fold serves once as the evaluation set, and the average performance across the folds is used as an estimate of the model's generalization performance.

Regularization can be evaluated by comparing the model's performance with and without regularization using cross-validation.

If regularization is effective, the model with regularization should have better generalization performance than the model without regularization.
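The comparison can be sketched with a hand-written k-fold loop. The example assumes ridge regression on synthetic data with few samples and many features, a setting where regularization tends to help; `cross_val_mse` and the choice of λ = 10 are illustrative.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution; lam=0 gives ordinary least squares."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def cross_val_mse(X, y, lam, k=5, seed=0):
    """k-fold CV: each fold is held out once while the model trains on the other k - 1."""
    indices = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(indices, k)
    scores = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        w = ridge_fit(X[train_idx], y[train_idx], lam)
        scores.append(np.mean((X[val_idx] @ w - y[val_idx]) ** 2))
    return float(np.mean(scores))

rng = np.random.default_rng(0)
X = rng.normal(size=(60, 40))                      # few samples, many features
y = X[:, :3] @ np.array([2.0, -1.0, 0.5]) + rng.normal(size=60)

cv_plain = cross_val_mse(X, y, lam=0.0)
cv_ridge = cross_val_mse(X, y, lam=10.0)
print("no regularization:", round(cv_plain, 3))
print("ridge, lambda=10: ", round(cv_ridge, 3))
```

Both models are scored on exactly the same folds, so the difference in cross-validated error reflects the effect of the penalty and not a different split of the data.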

Other metrics that can be used to assess regularization effectiveness include the model's complexity (e.g., number of non-zero coefficients in L1 regularization), the stability of the model's predictions across different training sets, and the model's ability to handle noisy or corrupted data.

Case studies and real-world examples can also provide valuable insights into the impact of regularization on model performance.

Analyzing the performance of regularized models in different domains, such as image classification, natural language processing, or recommender systems, can help understand the benefits and limitations of regularization techniques.

Proper model evaluation is essential for making informed decisions about regularization and selecting the most suitable technique for a given problem.

It requires a combination of quantitative metrics, domain knowledge, and practical considerations to assess the effectiveness of regularization and its impact on model performance.

FAQs on Regularization in ML/AI

What is the difference between L1 and L2 regularization?

L1 regularization adds a penalty term proportional to the absolute value of the model's parameters, while L2 regularization adds a penalty term proportional to the square of the parameters.

L1 regularization leads to sparse parameters and performs feature selection, while L2 regularization shrinks the parameters towards zero without inducing sparsity.

L1 is useful for feature selection and interpretability, while L2 is more stable and suitable when all features are believed to be relevant.

How does regularization affect model accuracy and complexity?

Regularization helps improve accuracy on unseen data by preventing overfitting, often at the cost of a slightly worse fit to the training data.

By adding constraints to the model's parameters, regularization encourages the model to learn simpler and more robust patterns, reducing its sensitivity to noise and outliers.

However, excessive regularization can lead to underfitting, where the model becomes too simple and fails to capture the underlying patterns in the data.

Striking the right balance between regularization and model complexity is crucial for achieving optimal performance.

What are the best practices for tuning regularization parameters?

Tuning regularization parameters requires a systematic approach and experimentation. Some best practices include:

Using cross-validation to estimate the model's generalization performance for different parameter values

Performing a grid search or random search over a range of parameter values to find the optimal configuration

Using domain knowledge and prior experience to narrow down the search space and select reasonable parameter ranges

Monitoring the model's performance on a validation set during training to detect overfitting and adjust the regularization strength accordingly

Considering the trade-off between model complexity and interpretability when selecting the regularization technique and parameter values

Conclusion

In this article, we explored the crucial role of regularization in machine learning to combat the common problem of overfitting.

Through techniques like L1 and L2 regularization, Dropout, weight decay, batch normalization, and data augmentation, we saw how they help models generalize better to new data, thus enhancing their real-world applicability.

Key takeaways include the importance of choosing the right regularization method depending on the specific features and complexity of the dataset.

Implementing these techniques properly can significantly improve a model's performance, making it robust against variations in unseen data.

Ultimately, regularization in machine learning not only refines the model's capacity to learn but also ensures it can perform reliably in various operational environments.

P.S.:

If you like this article, share it with others ♻️

Would help a lot ❤️

And feel free to follow me at @juancolamendy for more like this.

Twitter: https://twitter.com/juancolamendy